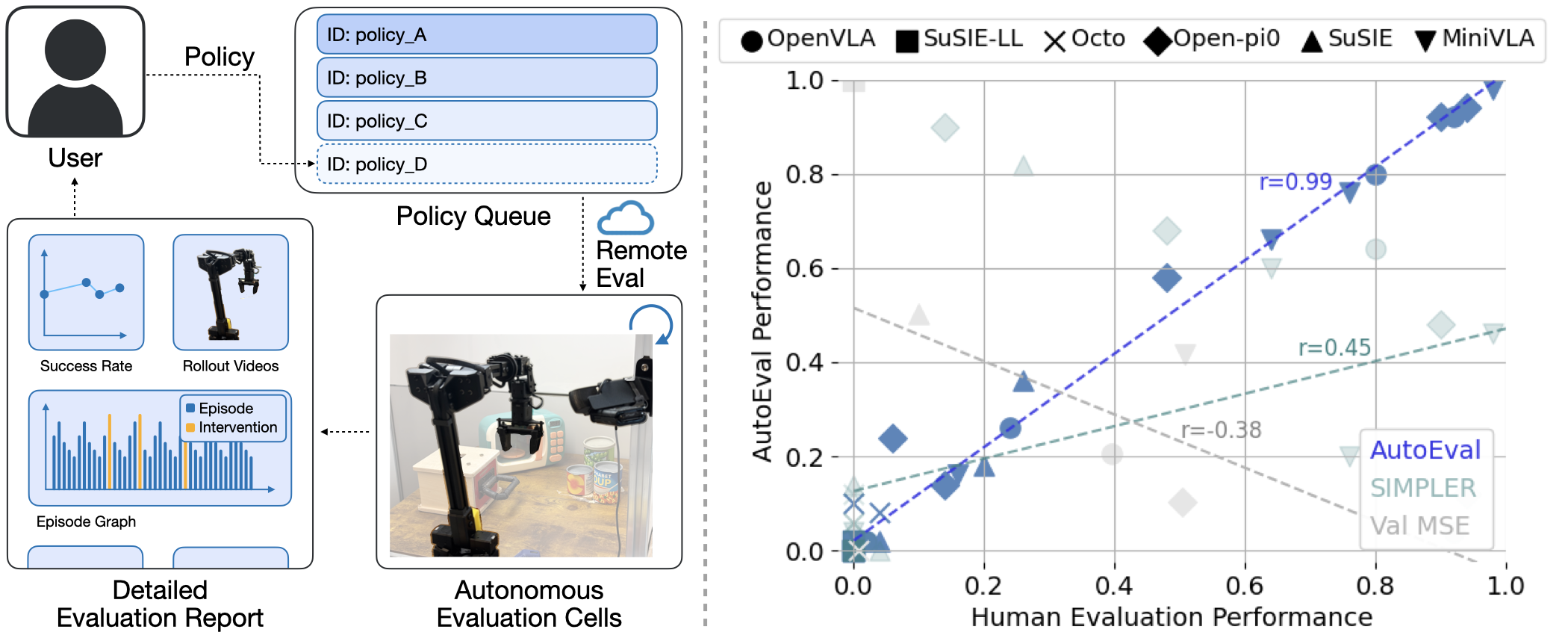

Overview

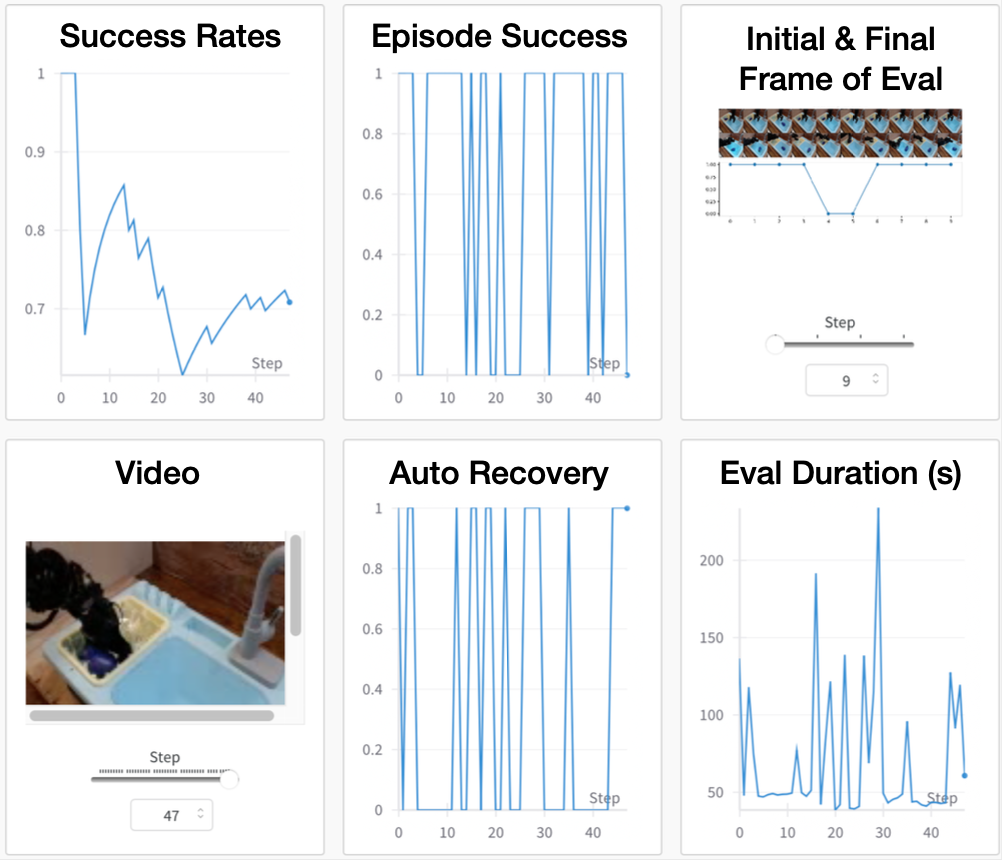

AutoEval is a system that autonomously evaluates generalist robot policies in the real world 24/7, and its evaluation results closely match ground-truth evaluations run by humans. AutoEval requires minimal human intervention because it uses robust success classifiers and reset policies fine-tuned from foundation models. We currently provide open access to two AutoEval stations with WidowX robots and four different tasks. Users can submit policies through an online dashboard and receive a detailed evaluation report (success rates, videos, etc.) once the evaluation job is done. See the video below for more details.
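The loop described above (roll out the submitted policy, score the episode with a success classifier, then reset the scene with a learned reset policy) can be illustrated with a minimal Python sketch. This is not AutoEval's actual implementation or API. Every name here (`autonomous_eval`, `EvalReport`, `max_reset_attempts`, the `hypothetical_task` label) is hypothetical. The retry-then-count-a-human-intervention fallback is also an assumption, meant to show how "minimal human intervention" could be tracked. The robot, classifier, and reset policy are replaced by simple stub callables.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class EvalReport:
    """Hypothetical summary of one evaluation job (not AutoEval's real report format)."""
    task: str
    successes: List[bool] = field(default_factory=list)
    human_interventions: int = 0

    @property
    def success_rate(self) -> float:
        return sum(self.successes) / len(self.successes) if self.successes else 0.0


def autonomous_eval(
    task: str,
    policy_rollout: Callable[[], object],          # runs the submitted policy, returns a final observation
    success_classifier: Callable[[object], bool],  # judges whether the task was completed
    reset_policy: Callable[[], bool],              # restores the scene; returns True if the reset worked
    num_episodes: int,
    max_reset_attempts: int = 3,                   # assumed retry budget before asking a human
) -> EvalReport:
    report = EvalReport(task=task)
    for _ in range(num_episodes):
        final_obs = policy_rollout()
        report.successes.append(success_classifier(final_obs))
        # Retry the learned reset a few times; only fall back to a human if every attempt fails.
        for _ in range(max_reset_attempts):
            if reset_policy():
                break
        else:
            report.human_interventions += 1
    return report


# Usage with stubs: scripted episode outcomes and reset results stand in for the real robot.
outcomes = iter([True, False, True, True])
resets = iter([True, False, False, False, True, True])
report = autonomous_eval(
    "hypothetical_task",
    policy_rollout=lambda: next(outcomes),
    success_classifier=lambda obs: bool(obs),
    reset_policy=lambda: next(resets),
    num_episodes=4,
)
print(report.success_rate, report.human_interventions)  # prints "0.75 1"
```

In this sketch, a failed reset costs only a retry rather than a stopped job. A human is counted as needed only when the whole reset budget is exhausted, which is one simple way to keep interventions rare.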